Summative Assessment

Summative assessments are one of the most critical evaluations of learner progress against standards. They occur after many rounds of formative assessment and, as such, generally feel like higher-stakes tests of knowledge. That said, remediation can and does still occur after summative assessments. I always want my scholars to feel motivated to perform at their best on summative assessments, but I also work hard to remind them that everyone always has room for improvement and can master difficult content with enough hard work.

During the summer of 2017, my school district hired me to write new summative assessments for all three grades of middle school math using an online platform called EdCite. Thus, I am intimately familiar with (and, in a sense, uniquely responsible for) the close alignment between grade-level learning objectives and the summative assessments that my students take.

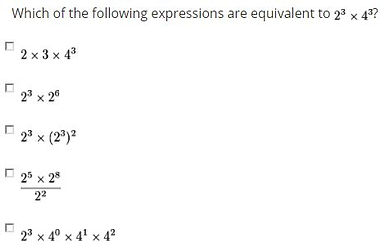

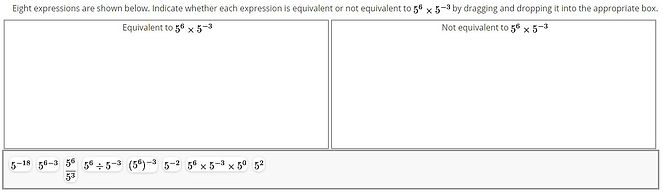

Sample EdCite assessment items from an eighth-grade test about exponent properties. The layout and question types are calibrated to match PARCC tests as closely as possible. Students take EdCite tests online, and score reports are automatically generated and appear on my computer in real time. This allows me to monitor the testing environment very carefully and even track data like which questions take students the longest to complete, which wrong answers are most popular, and which students score best on or struggle with particular standards.

Although students submit their answers electronically, I require them to use scratch paper as well. They turn in this scratch paper at the conclusion of the test, and I give them a classwork grade for it. This allows me to teach students how to use their scratch paper effectively rather than simply trying to figure out all of the answers in their heads. Over the course of the year, I anticipate needing to give less and less instruction about the proper use of scratch paper, and I believe that my scholars will all be ready to do a lot of work by hand for the computer-based PARCC test at the end of the year.

Samples of student scratch paper from a test. Many students do an excellent job of showing the work that they have done, but most fail to organize it in any meaningful way. Thus, one of my emphases throughout the year will be on organizational strategies that scholars can use to focus on each problem separately. Just like any other component of an assessment task, exemplary scratch paper is eligible for shout-outs and prizes. This enables me to show students what excellent on-paper work during a test looks like.

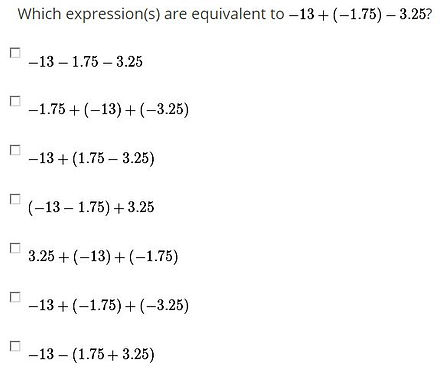

Writing summative assessments during the summer was an excellent first step in the "backwards planning" process, in which instruction is mapped to the knowledge, skills, and abilities that scholars must display in order to succeed on assessments. I am always aware of the demands of particular assessments, which enables me to prepare students intentionally for the tasks that they will be asked to accomplish. For example, a recent seventh-grade assessment contained several questions for which students were required to select all of the expressions that were equivalent to a given expression.

Sample "select-all" item from seventh-grade summative assessment.

Because I knew that my students would be seeing items like this, I was able to design a classroom activity to build student comfort with the concept of selecting multiple answers to a single problem. I wrote an expression on the board and gave each student a different expression. Each student was responsible for figuring out whether their expression was equivalent to mine. Thus, students' understanding of equivalent expressions was reinforced, and they were well prepared to select multiple answers to a single problem when the time came to do so on the assessment.
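For readers who want to see the logic of a "select-all" item spelled out, here is a minimal sketch in Python. The target expression and the candidate expressions are hypothetical examples of my own, not items from the actual assessment. The check compares values at several inputs; for linear expressions like these, agreeing at two or more different inputs is enough to show equivalence, but that shortcut would not hold for every kind of expression.

```python
from fractions import Fraction

def equivalent(f, g, samples=range(-5, 6)):
    """Return True if f and g give the same value at every sample x."""
    return all(f(Fraction(x)) == g(Fraction(x)) for x in samples)

# Hypothetical expression written on the board: 3(x + 4)
target = lambda x: 3 * (x + 4)

# Hypothetical expressions handed out to individual students
candidates = {
    "3x + 12": lambda x: 3 * x + 12,
    "3x + 4": lambda x: 3 * x + 4,
    "12 + 3x": lambda x: 12 + 3 * x,
    "x + x + x + 12": lambda x: x + x + x + 12,
}

# "Select all that apply": keep every candidate that matches the target
selected = [label for label, expr in candidates.items()
            if equivalent(target, expr)]
print(selected)
```

Here three of the four candidates are selected, which mirrors the point of the activity: more than one answer can be correct at once.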

Once my scholars have finished an EdCite assessment, grading it is quite simple. The computer scores many question types (e.g., multiple choice, drag-and-drop, and fill-in-the-blank), and I can read students' typed responses to short-answer questions and then write my own responses and comments back to them. My students do not have a lot of experience receiving feedback in this manner, but most really like being able to log on to any computer and see exactly what I have written to them whenever they wish. Students' final scores can be organized in a number of different ways; I find it most useful to look at them by content standard, which is possible because all questions are tagged with the relevant standards. This allows me to identify trends for re-teaching with ease.
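The idea of grouping scores by standard can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the records, the student labels, and the 70 percent re-teaching cutoff are all assumptions of mine, and the data layout is not EdCite's actual report or export format.

```python
from collections import defaultdict

# Hypothetical records: (student, standard tag, points earned, points possible)
results = [
    ("Student A", "8.EE.A.1", 3, 4),
    ("Student A", "8.G.A.2", 1, 4),
    ("Student B", "8.EE.A.1", 4, 4),
    ("Student B", "8.G.A.2", 2, 4),
]

def percent_by_standard(records):
    """Total the points for each standard and convert to a class percentage."""
    earned = defaultdict(int)
    possible = defaultdict(int)
    for _student, standard, got, out_of in records:
        earned[standard] += got
        possible[standard] += out_of
    return {s: round(100 * earned[s] / possible[s], 1) for s in earned}

summary = percent_by_standard(results)

# Assumed cutoff: flag any standard where the class earned under 70 percent
reteach = sorted(s for s, pct in summary.items() if pct < 70)
print(summary)
print(reteach)
```

Because every question carries a standard tag, the same totals could just as easily be grouped by student or by question type instead.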

Sample feedback from me typed into student answers. My comments are in red, and students can return to them to understand what they did correctly or incorrectly and how they might fix it on subsequent problems and assessments.

Screenshot from the "Live Assignment Dashboard" on EdCite. I can use this data to monitor the testing environment in real time, and it gives me a sense of student scores even before all of the tests are complete.

Screenshot of student scores broken down by standard. Score reports can take many forms, but I find this one the most helpful for subsequent instructional planning. Note that almost none of the students have completely mastered standard 8.G.A.2, which suggests an instructional opportunity for subsequent lessons.

Just as not all formative assessments are exit tickets, not all summative assessments are sit-down tests. I frequently integrate performance tasks into my plans for summative assessment because they provide another type of data that can be used to support, verify, and document student learning. They are also often more rigorous tests of the Common Core Standards for Mathematical Practice than a traditional test would be. I use performance tasks as summative assessments for roughly half of my units during the year. Below is a sample of work submitted by my sixth graders for one such task.